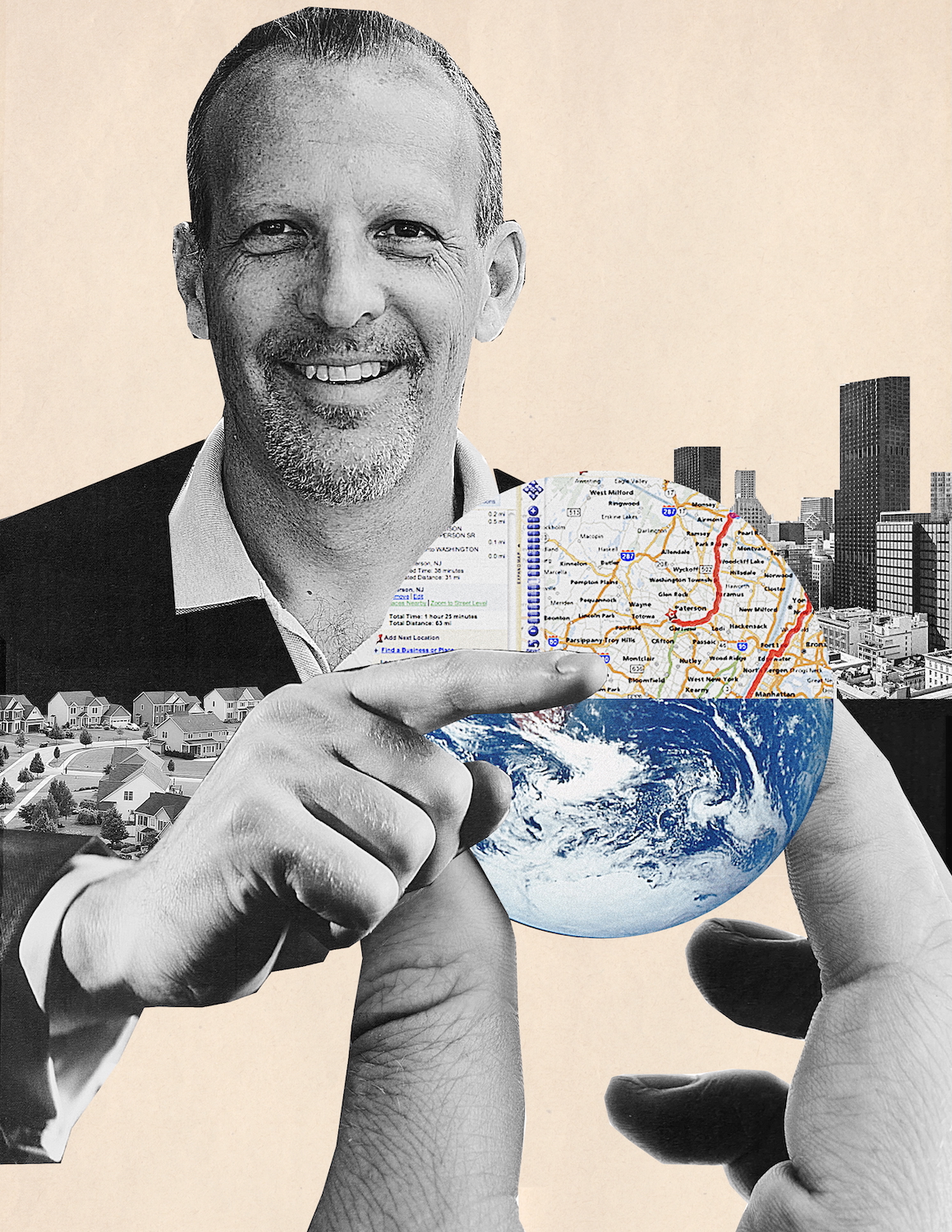

Simon Greenman, co-founder of MapQuest, the original online maps service, talks to Brunswick’s Chelsea Magnant about the opportunities and risks for our AI future.

It was a sublime moment of congruence, with several disparate threads falling into the hands of Simon Greenman. A bachelor’s degree in artificial intelligence had given him a desire to use cutting-edge technology to help humanity. A youthful fascination with geography and an early professional job at Andersen Consulting (now Accenture) beginning in 1990 led to a small company shaping cartography for CD-ROM distribution. Then, excitement at an internet conference in 1995 convinced him that radical change in information distribution was coming.

“I came raving back from the big city in New York to little Lancaster, Pennsylvania and said, ‘We’ve got to try this internet thing out on the maps,’” Greenman told us in a recent interview.

All of that came together in the birth of MapQuest. Originally called WebMapper, the revolutionary, interactive internet platform did more than give directions—it powered the popularity of the internet.

“We hit the switch on February 5th, 1996,” he recalls. “I remember we had spent the whole night putting everything together and literally hit the switch at 7:00 in the morning. Suddenly, there was all this traffic coming in. And we didn’t even know from where. We hadn’t advertised it; we hadn’t launched it. But people were finding us. And it exploded.”

It was a simple idea with an outsized impact. MapQuest allowed users to enter an address, see it on a map and print out a set of directions for how to get there.

“It was a ‘wow’ moment; you could see that people suddenly got the power of the internet,” Greenman says. “Something like geography and navigation, it’s really a fundamental human need: Where is it? How do I get there? We’ve been asking those questions since the dawn of society.

“I’d say, ‘Give me the street address where you were born.’ And it would come up with the map—a very crude, pixelated map, but with a cross at their home address. People were just floored.”

The word “viral” was not yet being applied to marketing. But viral was the response. From word-of-mouth, it soon gained the attention of USA Today and other national and global publications.

“It was massive,” Greenman says. “Within days, there was so much traffic coming through our website that we couldn’t handle it. The site basically keeled over.”

After leaving MapQuest in 1998, Greenman served in several other innovation leadership positions and earned an MBA from Harvard Business School. He served for three years as CEO of HomeAdvisor Europe, a local service-locator tool linking consumers with plumbers, roofers and others. A former four-year member of the World Economic Forum’s Global AI Council and still a member of its Expert Network, he is today the co-founder and CEO of Best Practice AI, a management consultancy advising businesses on implementing and overseeing the technology in their practice.

While Greenman brands himself “a techno-optimist,” he is, more than most, poignantly aware of the risks AI poses. We spoke with him about his history, and his hopes and fears for the technology.

Did MapQuest’s success surprise you?

I’ll be honest with you, I haven’t seen a reaction quite like that until recently with ChatGPT—that visceral, emotional response. So, we kind of knew it would be big. We just didn’t know how big it would be, and how quickly it would take off.

The other surprise is how short the half-life of innovation became, how quickly MapQuest was replaced. I wasn’t there at the time, but the world moved on. It was out-innovated, out-distributed very quickly. Within half a generation, MapQuest was sort of a brand from American antiquity.

How do you think about the risks of AI?

I’m excited about generative AI, because of the opportunity it has to democratize access to expertise—fundamentally, generative AI reduces the cost of expertise. It helps with cognitive skills. It really helps with knowledge tasks from white collar jobs. It’s going to impact all areas and functions, and it has the opportunity to improve things across all industries.

When people actually start using this technology, we find that their job satisfaction goes up quite significantly. Before they use it, there’s a lot of fear. But once they get involved, and they see that it becomes a copilot to augment their tasks and make a lot of the day-to-day drudgery and friction easier, it improves.

The downside is that the risks are significant. We cannot be passive as a society, and as business leaders, about the impact AI will have. AI has become political and global, geopolitical at this point. The race going on between the US, China and the rest of the world, as to who will dominate this, that’s being discussed in terms of a second nuclear arms race.

We also have ethical and social risks behind AI. If we rely on AI too much, do we, as individuals in society, lose skills like critical thinking, because we outsource our thinking to these machines? Do we lose empathy? Is there risk that this technology is biased against different social groups? Who takes from this and who profits from this?

And obviously, economic and workforce risks. Do we risk massive job loss and displacement? The answer is probably. But that does happen with any major technological shift, dating back to the industrial era, so we need to look after that.

Going on from there: technological and security risks, the weaponization of AI, killer drones and things like that. What about the climate footprint? What about the fact that AI hallucinates and is inaccurate? And then broader macro-level risks: Does it risk undermining democracy when you see deepfakes, when we no longer know what’s real and what’s not?

I could go on forever about it. But there’s a whole litany of risks that we need to take very seriously, and this is why it has become political at the end of the day. I think the EU AI Act, which was introduced into law earlier this year, is quite a sensible approach. It basically says that for high-risk use cases (employment, the justice system, immigration and use in civil society), the AI must effectively be audited to make sure it’s safe and fair. I don’t think that’s unreasonable for a society to do.

For companies, what we advise is, “You need to make sure your AI is trustworthy. It needs to be trustworthy to your customers, your consumers, to regulators, to society.” Don’t think of it as a defensive move. Think of it as an offensive move, because you’re making your company more trustworthy for the long term.

How prepared do you think your clients are for the gains and the risks of AI?

Certain industries and certain use cases are already heavily regulated. Financial services, healthcare, they are already used to heavy regulation and are much more sensitized to the use of AI within their organizations. Then there are certain industries that are much less familiar with regulation, and at times, almost blind to the importance of the risks of AI and the need to manage those risks.

We’ve seen some jokes in the legal field, where lawyers will go off and use ChatGPT tools to create briefs that they file in the courts. They don’t realize that this technology is not necessarily a truth engine; it’s a pattern engine that repeats a lot of what it’s seen before. There’s a whole series of issues with using these tools—education around this is definitely needed.

Six, seven years ago at the World Economic Forum, we were going on about the need for responsible AI frameworks and most people just looked at us strangely. Now companies are getting much more familiar. They don’t necessarily know exactly what to do, but they’re starting to be aware that there are risks.

And AI is now increasingly becoming a board-level issue, in oversight and risk as well as strategy. It’s becoming both offensive and defensive at the board level.

Given all the risks we talked about, how do we prepare this next generation to navigate living with this technology?

The history of AI is one of constant hype and disappointment. Is it over-hyped at the moment? Are we over-worrying? For the first time, I think we’re not.

I’m still actually much more optimistic than cynical. The exponential increase in data, in compute, in software means the level of innovation, adoption and impact has been huge. It is going to transform the future of business and society. I read a statistic that this year, 92% of undergraduate students in the UK are using AI in some form. It’s a new technology and it’s already at 92%.

So what can we do? It’s a question I get asked a lot: If a kid’s going to college, what should they learn to prepare themselves for this AI future? I think the first thing is to make the assumption that these tools, these systems are going to be used. You’ve got to become digitally and AI literate and comfortable with always learning new tools as well.

This takes us back to basic concepts: having good leadership and management skills becomes even more important. These tools are often only as good as how you interact with and instruct them. If you ask them lousy questions, you get lousy answers. If you ask them vague questions, you get vague answers.

People that work really well with these tools have critical thinking skills. They challenge the output, and they iterate, just like you would with more junior colleagues. So those skills of critical thinking, being articulate, being specific are really going to be important in the future. The world’s going to change, so adaptability and flexibility become critical—our softer skills: learning, not being too rigid.

Another thing we talk about is experimentation. We don’t know what these tools are going to do exactly. For the next five to 10 years, we’re going to be experimenting with whatever comes, so get comfortable with running experiments. Think about failure as well; not everything is going to work. I think those softer skills become more and more important.

The other side of it is the crisis for recent university graduates. When you don’t have that traditional career path, when there aren’t many jobs at that junior- or entry-level, what do you do? Coding’s done by computers, junior legal work’s done by computers and there aren’t jobs. How do you learn, how do you get wisdom and insights when you don’t have the experience? How do companies make sure they have career paths for junior people to learn?

It’s such a huge and important topic that I think we haven’t clearly thought it through yet as a society.

Having an undergraduate degree in AI, what has surprised you most in the AI evolution that’s unfolded since then?

I have a WhatsApp group with my fellow graduates, and we actually talk about this. We were sold on the dream that AI could answer all of our questions and expand our knowledge a long, long, long time ago. It would be all-knowing, all-omnipotent. Some people call it artificial general intelligence. We kind of believed in the science fiction vision of what AI would be. But we were disappointed so many times.

Then suddenly it felt like out of nowhere in 2022, ChatGPT launched. “Bloody hell,” was the response of all of us, “It has finally happened.” This is what we’d been dreaming about for all these years.

What’s really surprised those of us with a degree in AI is how damn good it is in terms of synthesizing so much knowledge on such a large scale. It brings expertise on any topic to our fingertips, to our keyboard. But we also believe it’s still “dumb.” It lacks common sense, along with conceptual understanding. Apple published a report recently saying that it doesn’t really reason; it just mimics reason. It can be fooled so easily. Emily M. Bender, an academic, called these systems “stochastic parrots.” It is copying everything it’s read on the internet, every video, every song, every movie; it’s seen it before. Where it doesn’t know the answer, it’ll fill in the blanks. At one level, it’s extraordinarily powerful, impactful and intelligent. At another level, it’s not.

So, the answer is, it’s brilliant, but we’ve still got a long way to go to make it really intelligent and get to artificial general intelligence, which is sort of the holy grail.

Technology is nonlinear. The ideas are often not new, but it’s a question of whether or not the technology is ready.

Technology needs to be valuable, usable and feasible. ChatGPT changed the game: suddenly it was extremely valuable, in terms of being able to answer any question about anything. It was suddenly very usable with an interface. And it was feasible. A lot of technology isn’t wrong necessarily, but it just isn’t hitting all three points.

Look at quantum computing. Everybody’s getting very frothy about that. I just don’t think it’s technically feasible right now, for scale adoption. Quantum technology is inevitable. I just don’t know if it’s five years away. And then, you have to ask, why now? Is it ready for adoption?

Good technology doesn’t necessarily make a good business. Those are different sets of skills. You have to have very good business acumen to go with it, is what I find.

I think for business, the final word is “inevitable.” This is inevitable. And you need to have plans in place to actually think about how this is going to transform your industry and your business. You need to get ahead of it, otherwise you will be left behind on this transformation curve.
