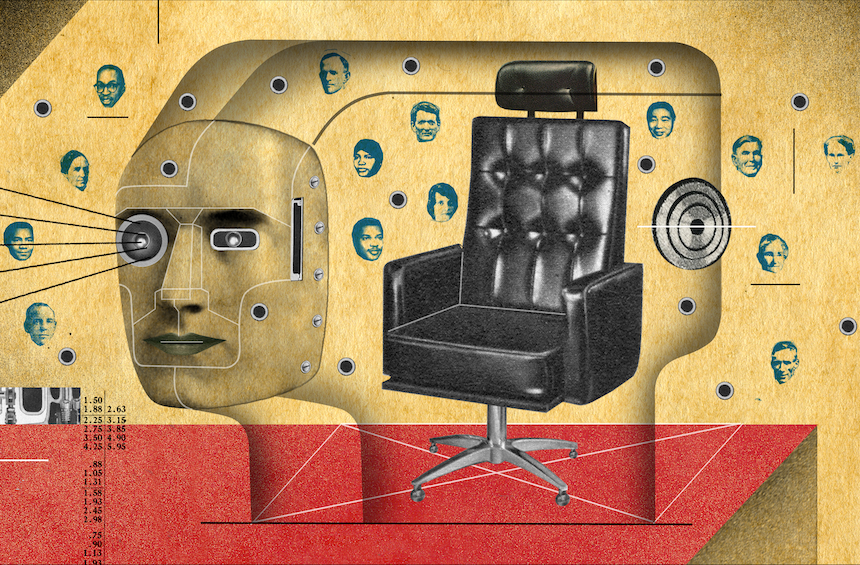

Seven questions every board should answer about AI.

As artificial intelligence continues to transform industries, board members are rushing to understand its implications for business strategy, governance and operations. AI introduces risks that must be carefully managed, but it also offers significant opportunities. Directors need to weigh both in the context of their company’s operations.

A recent article on the Harvard Law School Forum on Corporate Governance spells out this mandate: “The board’s oversight obligations extend to the company’s use of AI, and the same fiduciary mindset and attention to internal controls and policies are necessary. Directors must understand how AI impacts the company and its obligations, opportunities and risks, and apply the same general oversight approach as they apply to other management, compliance, risk and disclosure topics.”

In short, AI is not just the latest piece of helpful office technology; overseeing it is a critical part of the board’s responsibilities. But these waters are largely uncharted. Where to begin? In the race to harness the promise of AI, what should boards be asking themselves right now? We’ve compiled seven questions designed to bring clarity and rigor to boards’ initial thinking about AI use.

1. Is the board equipped to oversee AI today?

AI is a complex and fast-evolving technology that demands continuous learning and continuous oversight. The board needs AI expertise of its own rather than depending entirely on management’s. While the senior leadership team may have deep technical knowledge, the board can only provide independent oversight if directors are well-versed in AI issues themselves: those of today, and those that may emerge tomorrow. Relying on management alone risks sacrificing that independent perspective.

Boards must first ensure they have a foundational understanding of AI and its potential impact on the business. They then need a plan to stay informed on AI developments, because once-a-year compliance updates are not enough. Does the board have a continuous education program, such as regular briefings or sessions with external experts on the latest AI trends?

2. Are AI initiatives aligned with the company’s long-term goals?

AI has the potential to transform business operations. Boards must ensure that AI strategies align with the company’s core mission, values and long-term objectives. How do AI initiatives support the company’s broader goals? Are AI-driven decisions helping the business remain true to its core, or could they lead the organization down a path misaligned with its mission?

Boards that succeed in this will ensure AI initiatives are not just reactive or opportunistic, but rather crafted with long-term strategic alignment in mind.

3. How often are we reviewing our strategic plan in the age of AI?

In a world where AI can disrupt entire industries in a matter of months, the traditional “set and forget” approach of drafting a five-year strategic plan and reviewing it annually is no longer sufficient. The right cadence won’t be the same for every organization, but each board needs to consider how often it revisits and revises the company’s strategic plan, at a pace that suits its business.

The rapid development of AI means that boards must be more agile, regularly reviewing and adjusting the business strategy to account for the new opportunities and challenges AI brings. More frequent strategic planning sessions could be useful.

4. How are we addressing ethical and risk considerations related to AI?

AI raises unique ethical concerns, from bias in decision-making algorithms to the protection of sensitive data. What measures have we put in place to ensure AI is used ethically within the organization? Does our AI policy reflect our commitment to business ethics, and how frequently are we reviewing it? What are the most sensitive areas within our organization where AI could lead to a breach of our ethical guidelines?

AI can also perpetuate biases, especially in areas like product innovation, HR and supply chain management. How are we addressing this risk? Are we confident that our AI systems are being designed and used fairly and transparently? What protocols will need to be followed if a case of algorithmic bias emerges despite our best efforts?

5. How are we ensuring employees understand AI’s risks and responsibilities?

AI doesn’t only impact leadership or technical teams; its implications are organization-wide. Boards must ask whether employees are equipped with the knowledge to use AI responsibly. This question involves ensuring that AI policies are communicated effectively across all levels of the organization, backed by comprehensive training programs. Employees should understand both the opportunities and the risks of AI, and how they are expected to use the technology within the company’s ethical framework.

What steps have we taken to communicate AI policies, and how are we ensuring these policies are followed? Large companies may need to establish a whistleblower or feedback process at all levels so that concerns are raised as soon as they arise. Employees should be encouraged to use such a process, and their feedback should reach the board independently of management.

6. Are we overseeing AI’s role in audit processes?

AI has great potential to enhance the audit process by processing vast amounts of data quickly, identifying anomalies and helping auditors focus on high-risk areas. The Audit Committee should have a comprehensive understanding of how AI can be used in the external audit process as well as the risks and opportunities that the use of AI can present.

Those risks may include over-reliance on technology at the expense of human judgment, as well as the possibility of AI introducing biases and hallucinations. The Audit Committee must ensure that AI is used as a tool to support auditors, not to replace their professional discretion. What measures are in place to mitigate the risks of AI in both external and internal audits? How will human oversight be integrated, and how will privacy be safeguarded?

7. How are we managing the risk of AI bias and data privacy?

With AI systems relying on vast datasets, the risk of data bias and privacy violations is ever-present. Are we sufficiently managing the privacy implications of AI, particularly for employee and customer data? This question again comes back to knowing which areas of the business are most sensitive and most exposed to AI.

Boards should ensure that AI systems are regularly evaluated for biases, particularly in high-stakes areas like hiring or customer interaction. And of course, AI systems must comply with data privacy regulations. Does the board have confidence that AI policies align with data protection laws, and are we adequately managing the risks of AI in data processing?

AI is rapidly reshaping industries, and boards must become more adaptable. By answering these seven questions, boards can help ensure that AI is not only used effectively and ethically but also aligned with the organization’s long-term strategy and values. In this fast-evolving technological landscape, regular review, education and oversight are essential if boards are to take a proactive, informed approach to governance and remain effective in their roles.

Deeper AI integration, both between organizations and across systems, is becoming a reality, and it may affect the company in ways the board and executive leadership have not imagined. This points to an even larger question: Where do board members’ responsibilities start and end? How can we prepare today for that tomorrow?
